As AI agents powered by large language models (LLMs) continue to evolve, developers face a common challenge:

How do we consistently and securely connect these powerful models to real-world tools, data, and applications?

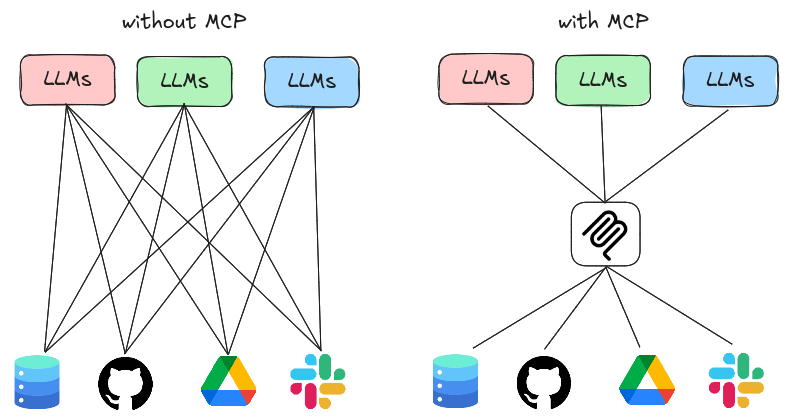

Enter the Model Context Protocol (MCP), an open standard that defines how LLMs interact with external tools and data.

Think of MCP as the USB-C for AI. Just as USB-C standardized device connections, MCP is a universal protocol that lets LLMs seamlessly plug into external tools, data sources, and applications.

By defining a shared format and communication layer, MCP ensures that AI agents can interact with their environments in a standardized, modular, and secure way.
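To make the "shared format" concrete: MCP messages are JSON-RPC 2.0 objects. A client that wants a server to run a tool sends a `tools/call` request, and the server replies with a result that carries the tool's output as content items. The minimal sketch below only builds and serializes those two messages with Python's standard library. The tool name `get_weather` and its `city` argument are hypothetical. A real client would also perform the `initialize` handshake and send the messages over a transport such as stdio.

```python
import json
from typing import Any, Dict


def make_request(req_id: int, method: str, params: Dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 request, the envelope MCP messages use."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "method": method, "params": params})


def make_text_result(req_id: int, text: str) -> str:
    """Serialize a JSON-RPC 2.0 response whose result holds one text content item."""
    result = {"content": [{"type": "text", "text": text}], "isError": False}
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


# Hypothetical tool call: "get_weather" is an illustrative name, not part of MCP itself.
request = make_request(1, "tools/call", {"name": "get_weather", "arguments": {"city": "Paris"}})
response = make_text_result(1, "Sunny and mild")

print(request)
print(response)
```

The response reuses the request's `id`, which is how a client matches replies to the calls it made.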

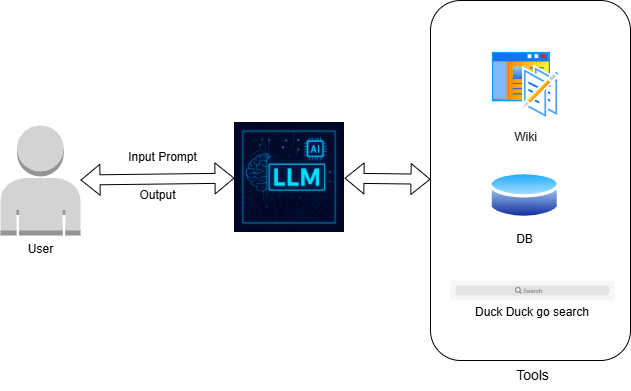

Large Language Models (LLMs) are AI systems trained on massive datasets. They can understand, generate, and manipulate human-like text and much more.

LLMs can:

When LLMs are paired with tools, they become intelligent agents, capable of taking actions, automating tasks, and interacting with real-world systems.
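The core idea of an agent is a decide-then-act step: the model chooses a tool, the program executes it, and the result flows back. The toy sketch below illustrates that loop under a loud assumption. `fake_model` is a hypothetical keyword-based stand-in for a real LLM, and the two tools are illustrative only.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class ToolCall:
    """A tool the (stand-in) model has decided to invoke, plus its input."""
    name: str
    argument: str


# Hypothetical tools; a real agent might wrap APIs, databases, or file systems.
TOOLS: Dict[str, Callable[[str], str]] = {
    "word_count": lambda text: str(len(text.split())),
    "uppercase": lambda text: text.upper(),
}


def fake_model(task: str) -> Optional[ToolCall]:
    """Stand-in for an LLM: picks a tool from a 'command: payload' task string.

    A real model would choose based on the task and the tools' descriptions.
    """
    command, _, payload = task.partition(":")
    if command == "count words":
        return ToolCall("word_count", payload.strip())
    if command == "shout":
        return ToolCall("uppercase", payload.strip())
    return None  # the model decided no tool is needed


def run_agent(task: str) -> str:
    """One decide-then-act step: ask the model, execute the chosen tool, report."""
    call = fake_model(task)
    if call is None:
        return "No tool needed."
    result = TOOLS[call.name](call.argument)  # the "taking action" step
    return f"{call.name} -> {result}"


print(run_agent("count words: agents take actions"))  # word_count -> 3
print(run_agent("shout: hello"))  # uppercase -> HELLO
```

Every agent framework wires its tools into this loop in its own way, which is exactly the duplication a shared protocol aims to remove.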

Examples of what agents can do:

But for all of this to work smoothly, agents need a common protocol. That’s where MCP shines.

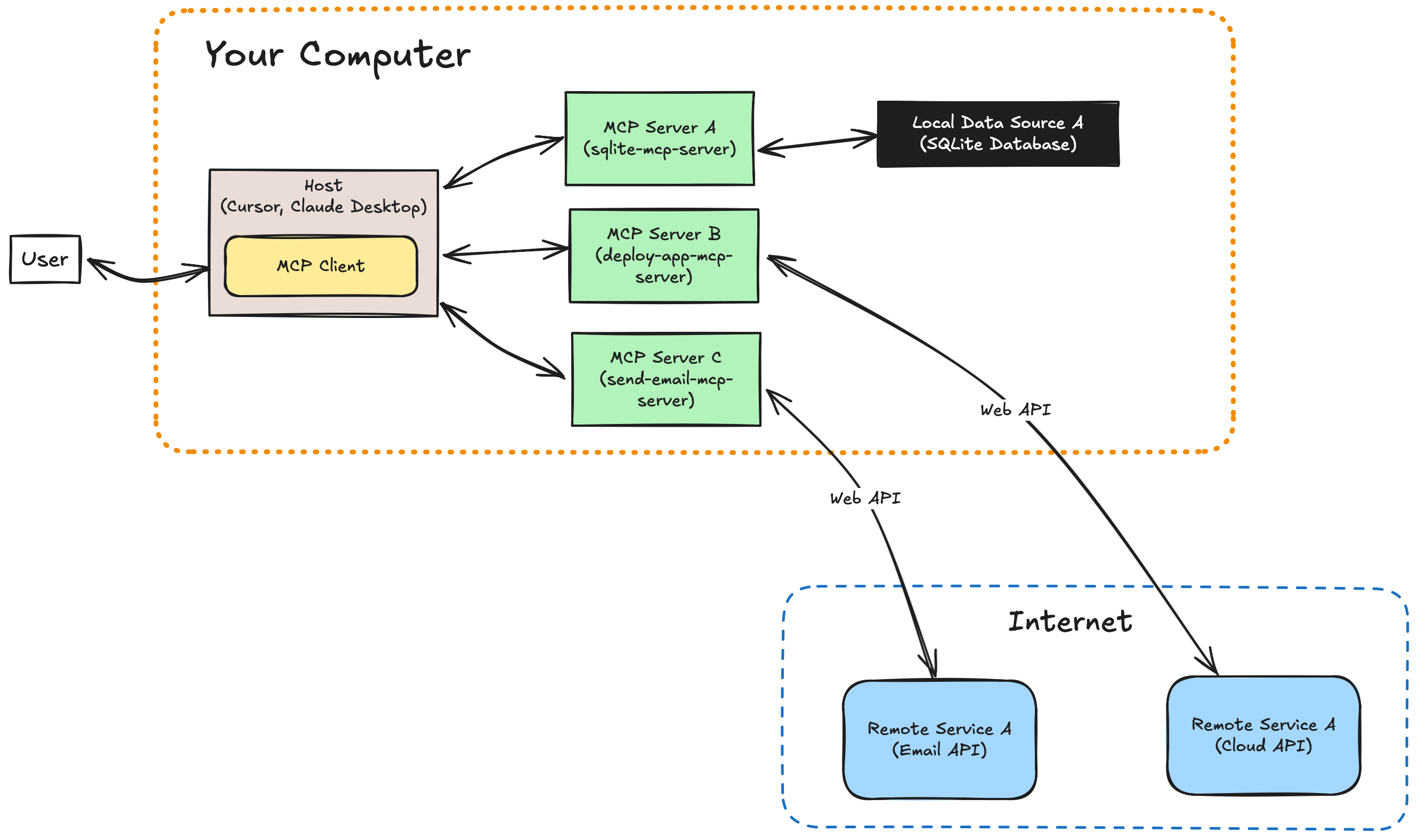

MCP offers a well-defined architecture to bridge LLMs and external systems:

MCP Architecture Overview:

This setup allows developers to create modular, interoperable agent environments, independent of specific models or frameworks.
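In MCP's client-server design, a host application (such as an IDE or chat app) runs clients, and each client keeps a one-to-one connection with a server that exposes capabilities such as tools. The sketch below models that split in a single process. `ToyServer` and `ToyClient` are hypothetical names, the direct method call stands in for a real transport such as stdio, and the error handling is simplified to one error code. Only the `tools/list` and `tools/call` method names and the result shapes follow MCP.

```python
import json
from typing import Any, Callable, Dict, Optional, Tuple


class ToyServer:
    """In-process stand-in for an MCP server that exposes tools via JSON-RPC."""

    def __init__(self) -> None:
        self.tools: Dict[str, Tuple[str, Callable[..., str]]] = {}

    def tool(self, name: str, description: str) -> Callable:
        """Decorator that registers a function as a named tool."""
        def register(fn: Callable[..., str]) -> Callable[..., str]:
            self.tools[name] = (description, fn)
            return fn
        return register

    def handle(self, raw: str) -> str:
        """Dispatch one JSON-RPC request string and return the response string."""
        msg = json.loads(raw)
        method, params = msg["method"], msg.get("params", {})
        if method == "tools/list":
            result: Dict[str, Any] = {"tools": [
                # inputSchema is simplified; real servers describe each argument.
                {"name": n, "description": d, "inputSchema": {"type": "object"}}
                for n, (d, _) in self.tools.items()
            ]}
        elif method == "tools/call" and params.get("name") in self.tools:
            _, fn = self.tools[params["name"]]
            text = fn(**params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": text}], "isError": False}
        else:
            # Unknown method or tool: reply with a JSON-RPC error object.
            error = {"code": -32601, "message": f"Cannot handle {method}"}
            return json.dumps({"jsonrpc": "2.0", "id": msg["id"], "error": error})
        return json.dumps({"jsonrpc": "2.0", "id": msg["id"], "result": result})


class ToyClient:
    """Lives inside the host application and talks to exactly one server."""

    def __init__(self, server: ToyServer) -> None:
        self.server = server  # stands in for a real transport such as stdio
        self.next_id = 0

    def request(self, method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
        self.next_id += 1
        raw = json.dumps({"jsonrpc": "2.0", "id": self.next_id,
                          "method": method, "params": params or {}})
        return json.loads(self.server.handle(raw))


server = ToyServer()


@server.tool("greet", "Return a greeting for the given name")
def greet(name: str) -> str:
    return f"Hello, {name}!"


client = ToyClient(server)
print(client.request("tools/list")["result"]["tools"][0]["name"])  # greet
reply = client.request("tools/call", {"name": "greet", "arguments": {"name": "MCP"}})
print(reply["result"]["content"][0]["text"])  # Hello, MCP!
```

Because the client depends only on the message format, the same `ToyClient` could talk to any other server that implements these methods. That decoupling is what makes the environment modular.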

MCP is already being adopted by some of the leading names in AI:

Without MCP, developers often reinvent the wheel, writing custom integrations, managing context manually, and struggling to scale agent capabilities.

With MCP, you can:

MCP is the missing link that bridges the gap between raw AI power and real-world usability. It gives developers a common language to build, scale, and easily compose intelligent agents.